Using Cognos Connection Jobs for Orchestration (with Cognos Enterprise Planning and Controller in the pit)

Most of the systems we implement don’t live in vacuum, they have to communicate with lots of neighbours. People often overlook the ability to call stored procedures from Cognos BI reports as a method of running some action, so I’d like to steal your 5 minutes for explaining how to use it to build better systems.

Let’s imagine a very common scenario: when budget data is “ready” it needs to be transferred to some other system. When I have a process that so user driven (it takes a real financial analyst to estimate budget “readiness”, just looking at submission states doesn’t work), I try to make it’s execution as simple as clicking a link on a web page. Nexting multiple screens and copying text files around is out of question.

If it’s a major project with a designated ETL tool, I try to implement all required workflow in ETL and provide users with an ability to run ETL packages by pressing links. I usually put all these urls in Cognos Connection to ensure that there’s a single entry point for whole system.

But sometimes there’s no ELT tool around and in that cases Cognos Connection itself may be enough.

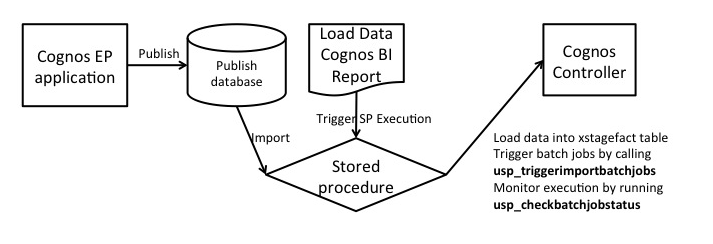

In a recent project I had to make up a Cognos Enterprise Planning data into Cognos Controller import flow. Each part of the equation may be substituted with something else in this example, logic stays the same: we use Cognos Connection to define the workflow.

In this instance Cognos Connection job would:

-

run Incremental Publish job to get EP data into readable format

-

run a stored procedure call to load data into Controller. To run a stored procedure you add it as a Query subject in Framework Manager and then running any report on this QS will trigger stored procedure execution

-

(optional) send “all done” report to group of users

For users it’s just a link that they can run directly or schedule for later. And you get Cognos Connection jobs security settings, run history and scheduling capabilities for free )

Bonus: Generalised controller import stored procedure, MS SQL Server

ALTER PROCEDURE [dbo].[usp_LoadEPDataIntoController] as

-- Importing published Cognos EP data into controller staging table and running import batch

-- Created:

-- Author:

-- Last updated:

-- Update details:

-- Cleaning up staging table just in case

truncate table cognos_10_controller_database.fastnet.xstagefact;

-- Inserting published data

insert into cognos_10_controller_database.fastnet.xstagefact

(st_id, st_period, st_actuality, st_account, st_company, st_amount,st_tranamount)

select

'ep_Load' + convert(varchar(8), getdate(), 112) +' '+ convert(varchar(8), getdate(), 108) st_id,

-- your data here

;

-- getting currently generated import spec

declare @max_st_id nvarchar(30);

set @max_st_id = (select MAX(st_id) from cognos_10_controller_database.fastnet.xstagefact);

-- running Controller import batch job

exec cognos_10_controller_database.fastnet.usp_triggerimportbatchjobs @max_st_id, 'CONTROLLER_IMPORT_SPEC_NAME', 'D', '', 'USER', 1,''

-- returning result of batch run

declare @batchResult as int;

declare @currentBatchId as int;

set @currentBatchId = (select MAX(batch_id) from cognos_10_controller_database.fastnet.xstagefact);

exec @batchResult= cognos_10_controller_database.fastnet.usp_checkbatchjobstatus @currentBatchId, 1

select

'Finished loading EP data' as message,

@batchResult as batch_result,

case

when @batchResult = 0 then 'The batch ID was not found.'

when @batchResult = 3 then 'The batch job was completed successfully.'

when @batchResult = 3 then 'The batch job was completed successfully.'

when @batchResult = 4 then 'The batch job was completed with errors.'

when @batchResult = 5 then 'The batch job was set to the ON HOLD status.'

when @batchResult = -1000 then 'The number of minutes to pause must be between 1 and 59.'

end as batch_result_message