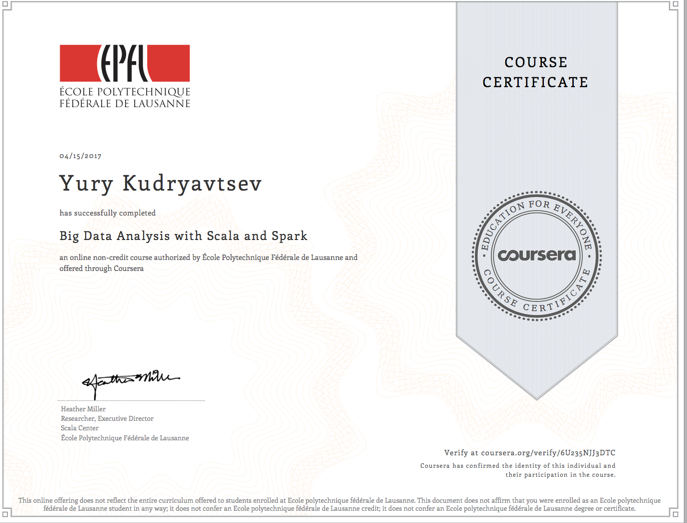

Coursera: Big Data Analysis with Scala and Spark

Work has been quite intense and very high-level recently, so I was missing the state of flow and the builder’s high that accompany coding. I thought it was a good time to learn something new, and since I’d been reading heaps of good things about Apache Spark, a course on it sounded like a worthwhile pursuit. With that in mind, I enrolled in Big Data Analysis with Scala and Spark and thoroughly enjoyed the experience.

It turned out to be an outstanding course. Heather Miller did an excellent job fitting an impressive variety of content, covering Spark architecture, processing and Spark SQL, into a short timeframe. I loved the coding assignments; they were all based on real-world problems and fascinating to do.

Spark itself is breathtaking. I’m impressed by the performance, the RDD architecture and the expressive power of Scala; it’s far better suited to a lot of data-oriented tasks than MapReduce.

On a side note, I enjoyed reading the Spark research papers and found them a better architectural reference than any of the books I was reading. And it turns out even the sight of the two-column layout with footnotes brings back all the -happy- PhD memories :)

There were a couple of things I didn’t take into account when signing up:

- This course is the 4th course in the 5-course Scala specialisation. I had never written anything in Scala before, and my last foray into functional programming was doing Scheme back at uni. Maybe it wasn’t so smart to try to learn two new things at the same time. A good lesson in humility.

- More importantly, I completely underestimated my work commitments over the course period; I even ended up going overseas a couple of times (China and Singapore) during the course. Funnily enough, those 8+ hour flights are priceless for coding. It was a real challenge to meet the deadlines, and I was a week late in the end.

All in all, it was a great experience. I still have two books about Spark to finish, and then I’ll need to do something more ‘real’ with Spark. I’ll be back to the other courses in this specialisation once I get some breathing space.