How to implement a work queue in TM1

TM1 / Planning Analytics team is doing an impressive amount of work to make TIs run in parallel, however, the ‘grunt’ part of TurboIntegrator processes is still (as of 10.2.2 / PA 2) limited by one core. Even if you have a beefy server with multiple cores, they would only be utilised in ‘preparing source view with MTQ’ stage and all Metadata / Data will be single threaded. A common way to speed things up is to split the work into independent chunks and run those in parallel via tm1runti. Sometimes you can get close to the linear increase in performance improvement and it can be worth a try.

Just before you embark on this ‘parallelisation’ journey, check whether you can speed things up using more ‘standard’ mechanisms (i.e. read aggregated data, make sure that your code is optimal, etc). My rule of thumb is that a well-done TI processes ~10k cells a second a single core. If you’re in this ballpark, say allocating 100k target cells in a minute, you’re good. If you’re processing 100k target cells in a few hours – there’s something wrong in what you’re doing and there are greater gains in reviewing your logic before throwing more hardware at it.

There are a couple main challenges to run things in parallel:

Data partitioning

You need to select subsets of data that can be processed independently in parallel. This depends on what you’re doing, but it is usually possible to find at least one dimension that can be used for splitting the work. Time dimension is sometimes an easy candidate for this.

Processing in parallel and monitoring the results

This is something that is quite awkward to build in TM1, as there are no built-in thread control mechanisms and it’s exactly what this whole post is about :)

Let’s say we would be splitting a large allocation TI into multiple chunks (by month) and running them in parallel.

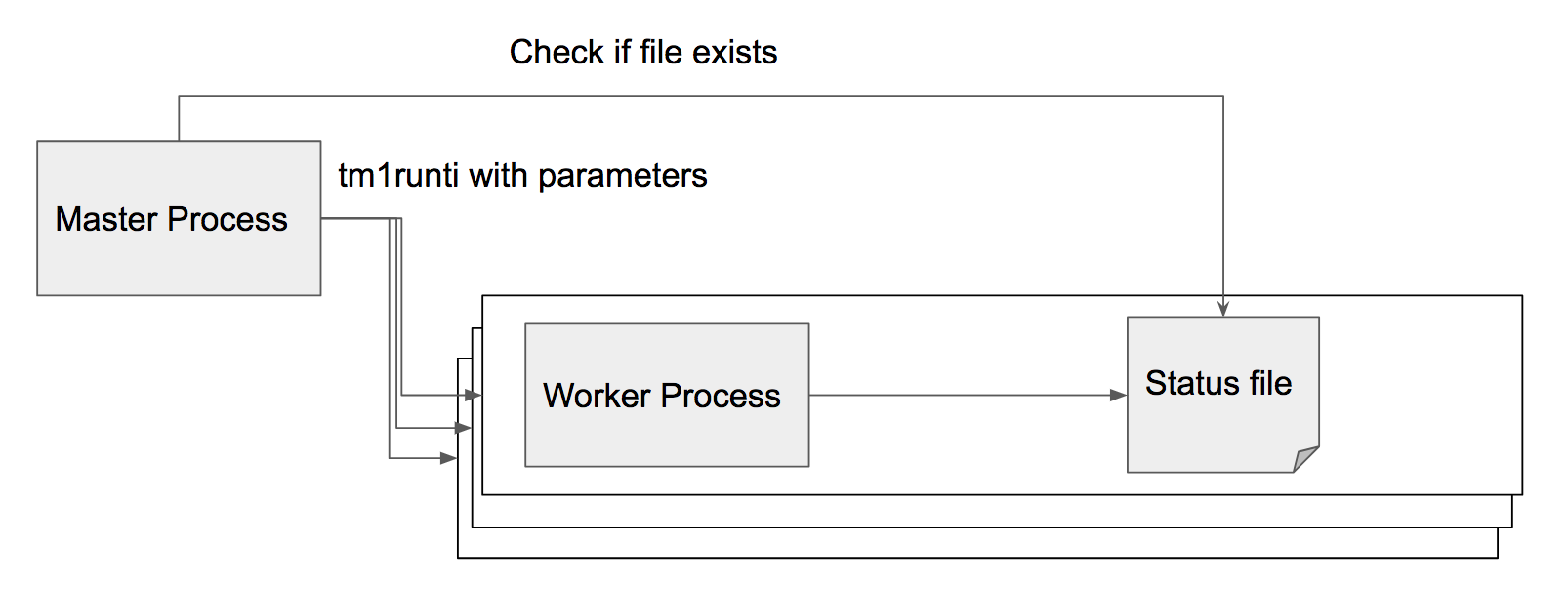

We would create 2 TIs:

- Worker process, for example, Allocate Data by Month (pMonth as a parameter, statusFile as a parameter):

this process will do all the heavy lifting of allocating data, but source view for it will be limited by a single month that we pass as a parameter

- Control process, for example, Allocate Data

this process will check how many months we want to run in parallel (I like to make this a system parameter) and will run tm1runti calls to Allocate Data by Month with required months. Running tm1runti is an asynchronous call ( ExecuteCommand with 0 Wait parameter), so we need to know when each allocation process is complete to launch the next one.

I use files to monitor execution of Allocate Data by Month worker processes by making sure that worker process will write up a status flag file to a temporary folder on startup and completion (something along the lines of Allocate\_Data\_July.Started, Allocate_Data.July.Complete)

Allocate Data control process then becomes a quite sophisticated while loop:

- Every x seconds check whether there are any months in the queue. A queue is generally just a string of all elements you’re processing at the moment, something like

Jan, Feb, Mar, Aprfor a queue of 4.- For each month in the queue, check whether there is a .Complete file or a .Failure file using a FileExists call. If there is, say we got Feb.Complete, we remove it from the queue string and decrease the number of currently executed elements

- Check whether the execution for a particular month has hung. For detecting hang execution you need some assumptions, i.e. ‘shouldn’t take longer than 5 minutes per month’. If it has, remove it from the queue and log an error.

- If there are months to process, we need to check the number of currently executing elements vs max number of elements in the queue. If we have space in the queue, we launch Allocate Data by Month for next month, add month name to queue string and increase the currently executing elements count.

- If we run out of elements to process and queue is empty: we’re done, we clean up the temporary files and report back to the user what happened.

And that’s it, just a bunch of files in a folder + a quite fancy TI to ‘control’ execution and you can be splitting TIs left, right and centre.

For some extra points and cookies:

- try to make status files as unique as possible with things like

TIMST ( NOW , '\Y\m\d\h\i\s' )_NumberToString( INT( RAND( ) * 100000 ))added to file names to avoid any conflicts - make each ‘worker’ process write a detailed log and combine them for end-user

- I sometimes try to detect hanged execution state by checking modification time of another file I’m ASCIIOUTPUTing from worker process a line to every 10k records